! pip install numpy keras jax matplotlib scikit-learn


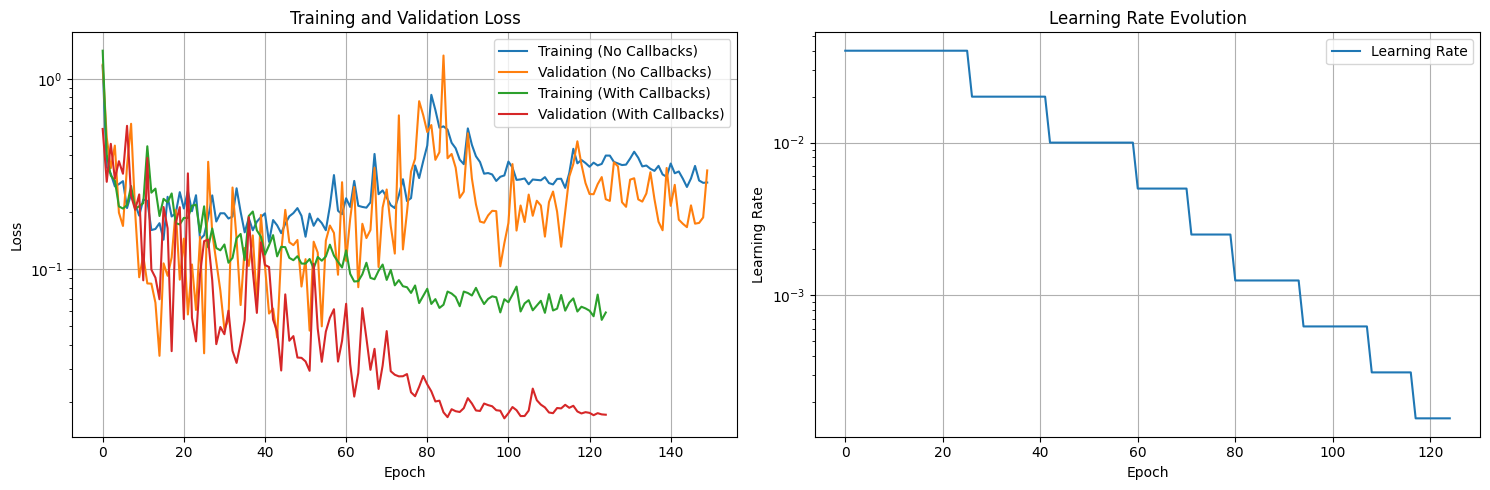

In this lab (TP), we explore how Keras callbacks affect model training on a regression problem inspired by Feynman equations, and we visualize the learning process with and without callbacks.

Part 1: Data Generation

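First, let’s generate the data. The exercise is motivated by Feynman-style physics equations such as the period of a pendulum, T = 2π√(L/g), but the generator below produces a harder synthetic target: a function of two inputs drawn uniformly from [-10, 10], with three regimes selected by the first input (quadratic for x₀ < -3, sinusoidal for -3 ≤ x₀ < 3, exponential for x₀ ≥ 3), plus Gaussian noise with standard deviation 0.1.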

import os
os.environ["KERAS_BACKEND"] = "jax"  # The environment variable must be set before importing keras; the default backend is tensorflow

import numpy as np
import keras
from keras import layers
import matplotlib.pyplot as plt


def generate_complex_data(n_samples=1000):
    """Generate data with multiple regimes and non-linear patterns"""
    X = np.random.uniform(-10, 10, (n_samples, 2))

    # Complex target function with multiple regimes
    y = np.zeros(n_samples)

    # Regime 1: Quadratic
    mask1 = X[:, 0] < -3
    y[mask1] = (X[mask1, 0]**2 + X[mask1, 1]**2) / 10

    # Regime 2: Sinusoidal
    mask2 = (X[:, 0] >= -3) & (X[:, 0] < 3)
    y[mask2] = np.sin(X[mask2, 0]) * np.cos(X[mask2, 1])

    # Regime 3: Exponential
    mask3 = X[:, 0] >= 3
    y[mask3] = np.exp(X[mask3, 0] / 10) + X[mask3, 1] / 2

    # Add some noise and reshape
    y = y + np.random.normal(0, 0.1, n_samples)
    y = y.reshape(-1, 1)

    return X, y


# Generate training and validation data
X_train, y_train = generate_complex_data(1000)
X_val, y_val = generate_complex_data(100)

# Scale the data (statistics are fitted on the training set only)
from sklearn.preprocessing import StandardScaler

scaler_X = StandardScaler()
scaler_y = StandardScaler()
X_train_scaled = scaler_X.fit_transform(X_train)
y_train_scaled = scaler_y.fit_transform(y_train)
X_val_scaled = scaler_X.transform(X_val)
y_val_scaled = scaler_y.transform(y_val)
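The scaling step above relies on scikit-learn. As a cross-check, the NumPy sketch below reproduces what StandardScaler computes with its default settings (centering, then dividing by the population standard deviation, ddof=0): statistics are estimated on the training split only and reused unchanged for the validation split. The helper names fit_standardizer and standardize are hypothetical, not part of scikit-learn.

```python
import numpy as np


def fit_standardizer(data):
    """Per-column mean and population standard deviation (ddof=0)."""
    data = np.asarray(data, dtype=float)
    mean = data.mean(axis=0)
    scale = data.std(axis=0)
    # Leave constant columns unscaled instead of dividing by zero
    scale = np.where(scale == 0.0, 1.0, scale)
    return mean, scale


def standardize(data, mean, scale):
    """Apply (x - mean) / scale using statistics estimated elsewhere."""
    return (np.asarray(data, dtype=float) - mean) / scale


rng = np.random.default_rng(0)
X_tr = rng.uniform(-10, 10, (1000, 2))  # same input range as generate_complex_data
X_va = rng.uniform(-10, 10, (100, 2))

mean, scale = fit_standardizer(X_tr)     # fit on the training split only
X_tr_s = standardize(X_tr, mean, scale)  # exactly zero mean, unit variance
X_va_s = standardize(X_va, mean, scale)  # only approximately standardized

print(X_tr_s.mean(axis=0), X_tr_s.std(axis=0))
print(X_va_s.mean(axis=0), X_va_s.std(axis=0))
```

Reusing the training statistics for validation is what keeps the validation set an honest proxy for unseen data: its columns end up close to, but not exactly at, zero mean and unit variance.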

Exercise 2: Creating and Training the Base Model

In this exercise, you will create a deep neural network to learn our complex function. We’ll first train it without any callbacks to establish a baseline.

Task 2.1: Model Creation

Create a function create_complex_model() that returns a compiled Keras model with the following architecture:

First block:

Dense layer with 256 units and ReLU activation

BatchNormalization layer

Second block:

Dense layer with 128 units and ReLU activation

Dropout layer with rate 0.3

Third block:

Dense layer with 128 units and ReLU activation

BatchNormalization layer

Fourth block:

Dense layer with 64 units and ReLU activation

Dropout layer with rate 0.3

Final layers:

Dense layer with 32 units and ReLU activation

Output Dense layer with 1 unit (no activation)

Model compilation requirements:

- Use the Adam optimizer with a learning rate of 0.04
- Use mean squared error (mse) as the loss function

Task 2.2: Model Training

Train the model without any callbacks using these specifications:

- Use the scaled training data (X_train_scaled, y_train_scaled)
- Train for 200 epochs
- Include validation data (X_val_scaled, y_val_scaled)
- Set verbose=1 to see progress

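# Create and train the baseline model (no callbacks)
model_no_callbacks = create_complex_model()
history_no_callbacks = model_no_callbacks.fit(
    X_train_scaled, y_train_scaled,
    epochs=200,
    validation_data=(X_val_scaled, y_val_scaled),
    verbose=1,
)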

Verification Steps:

After implementation, verify that:

1. The model architecture matches the specification exactly:

model_no_callbacks.summary()  # Should show exactly 6 Dense layers, 2 BatchNormalization layers and 2 Dropout layers

2. The initial learning rate is correct:

print(model_no_callbacks.optimizer.learning_rate.value)  # Should print 0.04

3. The training history contains both training and validation loss:

print(history_no_callbacks.history.keys())  # Should print dict_keys(['loss', 'val_loss'])

Epoch 1/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 1s 26ms/step - loss: 1.8069 - val_loss: 1.1858

Epoch 2/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3494 - val_loss: 0.4909

Epoch 3/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3519 - val_loss: 0.3395

Epoch 4/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2934 - val_loss: 0.4457

Epoch 5/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3044 - val_loss: 0.1982

Epoch 6/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2638 - val_loss: 0.1686

Epoch 7/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2212 - val_loss: 0.3478

Epoch 8/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1915 - val_loss: 0.5804

Epoch 9/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2120 - val_loss: 0.2001

Epoch 10/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2057 - val_loss: 0.0907

Epoch 11/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2217 - val_loss: 0.1176

Epoch 12/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2171 - val_loss: 0.0841

Epoch 13/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1749 - val_loss: 0.0839

Epoch 14/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1675 - val_loss: 0.0666

Epoch 15/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1785 - val_loss: 0.0351

Epoch 16/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1336 - val_loss: 0.1073

Epoch 17/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2466 - val_loss: 0.0923

Epoch 18/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1774 - val_loss: 0.1152

Epoch 19/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2176 - val_loss: 0.2046

Epoch 20/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2594 - val_loss: 0.0881

Epoch 21/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1958 - val_loss: 0.1447

Epoch 22/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2870 - val_loss: 0.0578

Epoch 23/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1983 - val_loss: 0.1058

Epoch 24/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2553 - val_loss: 0.0611

Epoch 25/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1730 - val_loss: 0.1481

Epoch 26/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1528 - val_loss: 0.0361

Epoch 27/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1918 - val_loss: 0.3665

Epoch 28/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2074 - val_loss: 0.1552

Epoch 29/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1817 - val_loss: 0.1097

Epoch 30/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1739 - val_loss: 0.0772

Epoch 31/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2120 - val_loss: 0.0484

Epoch 32/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1777 - val_loss: 0.0542

Epoch 33/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2015 - val_loss: 0.2685

Epoch 34/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2801 - val_loss: 0.1435

Epoch 35/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2052 - val_loss: 0.0648

Epoch 36/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1547 - val_loss: 0.1291

Epoch 37/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1714 - val_loss: 0.1040

Epoch 38/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1705 - val_loss: 0.1504

Epoch 39/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2001 - val_loss: 0.0665

Epoch 40/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2020 - val_loss: 0.1927

Epoch 41/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2065 - val_loss: 0.1038

Epoch 42/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1491 - val_loss: 0.0584

Epoch 43/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1747 - val_loss: 0.0621

Epoch 44/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1832 - val_loss: 0.0437

Epoch 45/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1450 - val_loss: 0.1226

Epoch 46/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1812 - val_loss: 0.2048

Epoch 47/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2071 - val_loss: 0.1385

Epoch 48/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 990us/step - loss: 0.2062 - val_loss: 0.1337

Epoch 49/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1995 - val_loss: 0.1423

Epoch 50/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1958 - val_loss: 0.0810

Epoch 51/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1478 - val_loss: 0.1128

Epoch 52/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1797 - val_loss: 0.0475

Epoch 53/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1462 - val_loss: 0.1394

Epoch 54/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1817 - val_loss: 0.1222

Epoch 55/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1766 - val_loss: 0.0501

Epoch 56/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1562 - val_loss: 0.1411

Epoch 57/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2126 - val_loss: 0.1695

Epoch 58/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3287 - val_loss: 0.1550

Epoch 59/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2073 - val_loss: 0.0934

Epoch 60/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1852 - val_loss: 0.2863

Epoch 61/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2860 - val_loss: 0.1105

Epoch 62/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2071 - val_loss: 0.1836

Epoch 63/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3188 - val_loss: 0.2702

Epoch 64/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2395 - val_loss: 0.0804

Epoch 65/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2196 - val_loss: 0.1732

Epoch 66/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2142 - val_loss: 0.1457

Epoch 67/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1999 - val_loss: 0.1606

Epoch 68/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3314 - val_loss: 0.3405

Epoch 69/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2986 - val_loss: 0.1027

Epoch 70/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2039 - val_loss: 0.2108

Epoch 71/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2415 - val_loss: 0.2621

Epoch 72/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2228 - val_loss: 0.1684

Epoch 73/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2118 - val_loss: 0.1207

Epoch 74/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2174 - val_loss: 0.6426

Epoch 75/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3596 - val_loss: 0.1270

Epoch 76/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2356 - val_loss: 0.1993

Epoch 77/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2292 - val_loss: 0.3261

Epoch 78/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3298 - val_loss: 0.3800

Epoch 79/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3447 - val_loss: 0.7629

Epoch 80/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3919 - val_loss: 0.6451

Epoch 81/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3420 - val_loss: 0.5252

Epoch 82/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.8493 - val_loss: 0.5717

Epoch 83/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.7178 - val_loss: 0.3753

Epoch 84/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 2ms/step - loss: 0.5318 - val_loss: 0.4116

Epoch 85/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.5546 - val_loss: 1.3251

Epoch 86/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.5027 - val_loss: 0.3825

Epoch 87/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4553 - val_loss: 0.4029

Epoch 88/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4473 - val_loss: 0.3412

Epoch 89/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4051 - val_loss: 0.2370

Epoch 90/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3174 - val_loss: 0.2552

Epoch 91/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4985 - val_loss: 0.5171

Epoch 92/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4756 - val_loss: 0.2995

Epoch 93/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3824 - val_loss: 0.2190

Epoch 94/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 971us/step - loss: 0.3575 - val_loss: 0.1778

Epoch 95/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 979us/step - loss: 0.3116 - val_loss: 0.1754

Epoch 96/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 975us/step - loss: 0.3002 - val_loss: 0.1916

Epoch 97/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3238 - val_loss: 0.2025

Epoch 98/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2929 - val_loss: 0.2017

Epoch 99/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3075 - val_loss: 0.1035

Epoch 100/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 978us/step - loss: 0.2942 - val_loss: 0.1370

Epoch 101/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 974us/step - loss: 0.3668 - val_loss: 0.1746

Epoch 102/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 959us/step - loss: 0.3071 - val_loss: 0.3565

Epoch 103/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 989us/step - loss: 0.3274 - val_loss: 0.1595

Epoch 104/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 984us/step - loss: 0.2742 - val_loss: 0.2160

Epoch 105/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 967us/step - loss: 0.3199 - val_loss: 0.1768

Epoch 106/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2579 - val_loss: 0.2464

Epoch 107/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3095 - val_loss: 0.1907

Epoch 108/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2994 - val_loss: 0.2288

Epoch 109/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2897 - val_loss: 0.2159

Epoch 110/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 961us/step - loss: 0.3269 - val_loss: 0.1482

Epoch 111/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2609 - val_loss: 0.2252

Epoch 112/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 990us/step - loss: 0.2662 - val_loss: 0.2557

Epoch 113/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2702 - val_loss: 0.2005

Epoch 114/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 994us/step - loss: 0.3056 - val_loss: 0.1312

Epoch 115/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 995us/step - loss: 0.2858 - val_loss: 0.2010

Epoch 116/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 982us/step - loss: 0.3060 - val_loss: 0.3064

Epoch 117/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 959us/step - loss: 0.5017 - val_loss: 0.3567

Epoch 118/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3398 - val_loss: 0.4697

Epoch 119/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3795 - val_loss: 0.3551

Epoch 120/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3811 - val_loss: 0.2834

Epoch 121/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3228 - val_loss: 0.2481

Epoch 122/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3381 - val_loss: 0.2475

Epoch 123/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3343 - val_loss: 0.2795

Epoch 124/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3621 - val_loss: 0.3046

Epoch 125/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3825 - val_loss: 0.2330

Epoch 126/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4098 - val_loss: 0.2283

Epoch 127/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3294 - val_loss: 0.3638

Epoch 128/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3789 - val_loss: 0.3468

Epoch 129/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3534 - val_loss: 0.2247

Epoch 130/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3682 - val_loss: 0.2125

Epoch 131/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3950 - val_loss: 0.2952

Epoch 132/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4117 - val_loss: 0.3003

Epoch 133/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4300 - val_loss: 0.2325

Epoch 134/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3468 - val_loss: 0.2264

Epoch 135/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3456 - val_loss: 0.2498

Epoch 136/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3528 - val_loss: 0.3229

Epoch 137/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3590 - val_loss: 0.2331

Epoch 138/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3263 - val_loss: 0.1774

Epoch 139/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3077 - val_loss: 0.1601

Epoch 140/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2976 - val_loss: 0.3400

Epoch 141/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3300 - val_loss: 0.2151

Epoch 142/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3186 - val_loss: 0.2773

Epoch 143/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3404 - val_loss: 0.1820

Epoch 144/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3141 - val_loss: 0.1731

Epoch 145/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2783 - val_loss: 0.1662

Epoch 146/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2925 - val_loss: 0.2164

Epoch 147/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3581 - val_loss: 0.1734

Epoch 148/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3279 - val_loss: 0.1749

Epoch 149/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2854 - val_loss: 0.1869

Epoch 150/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2905 - val_loss: 0.3301


Part 3: Model With Callbacks

Exercise: Implementing Training Callbacks

Now that we’ve trained a model without callbacks, let’s add training control mechanisms. We want to:

1. Stop training when the model stops improving
2. Reduce the learning rate when performance plateaus
3. Track the learning rate changes during training

Complete the following tasks:

Create a custom callback class named LRHistory that will store learning rates during training:

Initialize an empty list in on_train_begin

Store the current learning rate in on_epoch_end

Important: convert the learning rate to a Python float so that you store its actual value rather than a reference to the optimizer’s learning-rate variable

Set up the following callbacks list:

Early stopping that:

Monitors validation loss

Has patience of 25 epochs

Restores the best weights when triggered

Learning rate reduction that:

Monitors validation loss

Reduces learning rate by half when triggered

Has patience of 9 epochs

Won’t go below 1e-6

Your LRHistory callback instance

Train the model for 200 epochs using these callbacks.

The goal is to produce a model that automatically stops when necessary and adapts its learning rate, while keeping track of these learning rate changes.

Later, we’ll use the stored learning rates to understand how the training process evolved.

Epoch 1/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 1s 24ms/step - loss: 2.4815 - val_loss: 0.5451 - learning_rate: 0.0400

Epoch 2/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4268 - val_loss: 0.2876 - learning_rate: 0.0400

Epoch 3/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3283 - val_loss: 0.4554 - learning_rate: 0.0400

Epoch 4/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2647 - val_loss: 0.2985 - learning_rate: 0.0400

Epoch 5/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 983us/step - loss: 0.2216 - val_loss: 0.3691 - learning_rate: 0.0400

Epoch 6/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2371 - val_loss: 0.3170 - learning_rate: 0.0400

Epoch 7/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2015 - val_loss: 0.5661 - learning_rate: 0.0400

Epoch 8/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.3314 - val_loss: 0.2429 - learning_rate: 0.0400

Epoch 9/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2033 - val_loss: 0.2051 - learning_rate: 0.0400

Epoch 10/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2206 - val_loss: 0.2469 - learning_rate: 0.0400

Epoch 11/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2272 - val_loss: 0.0874 - learning_rate: 0.0400

Epoch 12/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.4146 - val_loss: 0.3839 - learning_rate: 0.0400

Epoch 13/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2886 - val_loss: 0.0994 - learning_rate: 0.0400

Epoch 14/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2536 - val_loss: 0.0902 - learning_rate: 0.0400

Epoch 15/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2025 - val_loss: 0.0695 - learning_rate: 0.0400

Epoch 16/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2345 - val_loss: 0.2120 - learning_rate: 0.0400

Epoch 17/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2332 - val_loss: 0.1640 - learning_rate: 0.0400

Epoch 18/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2677 - val_loss: 0.0371 - learning_rate: 0.0400

Epoch 19/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 978us/step - loss: 0.2027 - val_loss: 0.1747 - learning_rate: 0.0400

Epoch 20/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 955us/step - loss: 0.2271 - val_loss: 0.2116 - learning_rate: 0.0400

Epoch 21/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 989us/step - loss: 0.1896 - val_loss: 0.0547 - learning_rate: 0.0400

Epoch 22/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 996us/step - loss: 0.1822 - val_loss: 0.3186 - learning_rate: 0.0400

Epoch 23/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 969us/step - loss: 0.1836 - val_loss: 0.0556 - learning_rate: 0.0400

Epoch 24/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 982us/step - loss: 0.2187 - val_loss: 0.0418 - learning_rate: 0.0400

Epoch 25/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 997us/step - loss: 0.1404 - val_loss: 0.0945 - learning_rate: 0.0400

Epoch 26/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 981us/step - loss: 0.2349 - val_loss: 0.1403 - learning_rate: 0.0400

Epoch 27/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 969us/step - loss: 0.1471 - val_loss: 0.1429 - learning_rate: 0.0400

Epoch 28/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 999us/step - loss: 0.1769 - val_loss: 0.0874 - learning_rate: 0.0200

Epoch 29/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1187 - val_loss: 0.0404 - learning_rate: 0.0200

Epoch 30/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1244 - val_loss: 0.0497 - learning_rate: 0.0200

Epoch 31/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1289 - val_loss: 0.0455 - learning_rate: 0.0200

Epoch 32/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1110 - val_loss: 0.0605 - learning_rate: 0.0200

Epoch 33/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1200 - val_loss: 0.0373 - learning_rate: 0.0200

Epoch 34/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1617 - val_loss: 0.0322 - learning_rate: 0.0200

Epoch 35/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1341 - val_loss: 0.0408 - learning_rate: 0.0200

Epoch 36/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1140 - val_loss: 0.0540 - learning_rate: 0.0200

Epoch 37/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1909 - val_loss: 0.1876 - learning_rate: 0.0200

Epoch 38/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.2075 - val_loss: 0.0981 - learning_rate: 0.0200

Epoch 39/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1716 - val_loss: 0.0589 - learning_rate: 0.0200

Epoch 40/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1337 - val_loss: 0.1384 - learning_rate: 0.0200

Epoch 41/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1283 - val_loss: 0.1052 - learning_rate: 0.0200

Epoch 42/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1293 - val_loss: 0.1026 - learning_rate: 0.0200

Epoch 43/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1470 - val_loss: 0.0543 - learning_rate: 0.0200

Epoch 44/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1146 - val_loss: 0.0471 - learning_rate: 0.0100

Epoch 45/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1105 - val_loss: 0.0294 - learning_rate: 0.0100

Epoch 46/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1237 - val_loss: 0.0737 - learning_rate: 0.0100

Epoch 47/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1256 - val_loss: 0.0421 - learning_rate: 0.0100

Epoch 48/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1198 - val_loss: 0.0445 - learning_rate: 0.0100

Epoch 49/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1114 - val_loss: 0.0343 - learning_rate: 0.0100

Epoch 50/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1291 - val_loss: 0.0342 - learning_rate: 0.0100

Epoch 51/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0979 - val_loss: 0.0328 - learning_rate: 0.0100

Epoch 52/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1052 - val_loss: 0.0292 - learning_rate: 0.0100

Epoch 53/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0960 - val_loss: 0.1068 - learning_rate: 0.0100

Epoch 54/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1172 - val_loss: 0.0487 - learning_rate: 0.0100

Epoch 55/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1158 - val_loss: 0.0326 - learning_rate: 0.0100

Epoch 56/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1277 - val_loss: 0.0470 - learning_rate: 0.0100

Epoch 57/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1392 - val_loss: 0.0555 - learning_rate: 0.0100

Epoch 58/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1399 - val_loss: 0.0616 - learning_rate: 0.0100

Epoch 59/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1121 - val_loss: 0.0327 - learning_rate: 0.0100

Epoch 60/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1046 - val_loss: 0.0421 - learning_rate: 0.0100

Epoch 61/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1283 - val_loss: 0.0658 - learning_rate: 0.0100

Epoch 62/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1011 - val_loss: 0.0317 - learning_rate: 0.0050

Epoch 63/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0957 - val_loss: 0.0214 - learning_rate: 0.0050

Epoch 64/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0791 - val_loss: 0.0285 - learning_rate: 0.0050

Epoch 65/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0887 - val_loss: 0.0624 - learning_rate: 0.0050

Epoch 66/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1157 - val_loss: 0.0434 - learning_rate: 0.0050

Epoch 67/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0905 - val_loss: 0.0296 - learning_rate: 0.0050

Epoch 68/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0848 - val_loss: 0.0381 - learning_rate: 0.0050

Epoch 69/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1055 - val_loss: 0.0235 - learning_rate: 0.0050

Epoch 70/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1212 - val_loss: 0.0312 - learning_rate: 0.0050

Epoch 71/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0874 - val_loss: 0.0473 - learning_rate: 0.0050

Epoch 72/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.1037 - val_loss: 0.0291 - learning_rate: 0.0050

Epoch 73/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0703 - val_loss: 0.0279 - learning_rate: 0.0025

Epoch 74/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0862 - val_loss: 0.0273 - learning_rate: 0.0025

Epoch 75/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0790 - val_loss: 0.0274 - learning_rate: 0.0025

Epoch 76/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0753 - val_loss: 0.0281 - learning_rate: 0.0025

Epoch 77/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0773 - val_loss: 0.0226 - learning_rate: 0.0025

Epoch 78/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0720 - val_loss: 0.0215 - learning_rate: 0.0025

Epoch 79/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0677 - val_loss: 0.0241 - learning_rate: 0.0025

Epoch 80/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0726 - val_loss: 0.0275 - learning_rate: 0.0025

Epoch 81/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0791 - val_loss: 0.0248 - learning_rate: 0.0025

Epoch 82/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0624 - val_loss: 0.0228 - learning_rate: 0.0012

Epoch 83/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0590 - val_loss: 0.0202 - learning_rate: 0.0012

Epoch 84/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0590 - val_loss: 0.0204 - learning_rate: 0.0012

Epoch 85/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0644 - val_loss: 0.0177 - learning_rate: 0.0012

Epoch 86/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0729 - val_loss: 0.0167 - learning_rate: 0.0012

Epoch 87/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0718 - val_loss: 0.0184 - learning_rate: 0.0012

Epoch 88/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0736 - val_loss: 0.0179 - learning_rate: 0.0012

Epoch 89/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0625 - val_loss: 0.0178 - learning_rate: 0.0012

Epoch 90/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0738 - val_loss: 0.0186 - learning_rate: 0.0012

Epoch 91/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0720 - val_loss: 0.0210 - learning_rate: 0.0012

Epoch 92/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0695 - val_loss: 0.0197 - learning_rate: 0.0012

Epoch 93/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0698 - val_loss: 0.0181 - learning_rate: 0.0012

Epoch 94/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0720 - val_loss: 0.0180 - learning_rate: 0.0012

Epoch 95/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0705 - val_loss: 0.0197 - learning_rate: 0.0012

Epoch 96/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0691 - val_loss: 0.0193 - learning_rate: 6.2500e-04

Epoch 97/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0673 - val_loss: 0.0190 - learning_rate: 6.2500e-04

Epoch 98/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0784 - val_loss: 0.0182 - learning_rate: 6.2500e-04

Epoch 99/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0580 - val_loss: 0.0180 - learning_rate: 6.2500e-04

Epoch 100/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0654 - val_loss: 0.0165 - learning_rate: 6.2500e-04

Epoch 101/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0695 - val_loss: 0.0175 - learning_rate: 6.2500e-04

Epoch 102/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0733 - val_loss: 0.0189 - learning_rate: 6.2500e-04

Epoch 103/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0826 - val_loss: 0.0182 - learning_rate: 6.2500e-04

Epoch 104/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0550 - val_loss: 0.0169 - learning_rate: 6.2500e-04

Epoch 105/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0613 - val_loss: 0.0169 - learning_rate: 6.2500e-04

Epoch 106/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0579 - val_loss: 0.0181 - learning_rate: 6.2500e-04

Epoch 107/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0630 - val_loss: 0.0236 - learning_rate: 6.2500e-04

Epoch 108/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0693 - val_loss: 0.0205 - learning_rate: 6.2500e-04

Epoch 109/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0670 - val_loss: 0.0194 - learning_rate: 6.2500e-04

Epoch 110/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0579 - val_loss: 0.0188 - learning_rate: 3.1250e-04

Epoch 111/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0855 - val_loss: 0.0177 - learning_rate: 3.1250e-04

Epoch 112/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0601 - val_loss: 0.0175 - learning_rate: 3.1250e-04

Epoch 113/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0647 - val_loss: 0.0186 - learning_rate: 3.1250e-04

Epoch 114/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0725 - val_loss: 0.0186 - learning_rate: 3.1250e-04

Epoch 115/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0653 - val_loss: 0.0194 - learning_rate: 3.1250e-04

Epoch 116/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0607 - val_loss: 0.0187 - learning_rate: 3.1250e-04

Epoch 117/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0632 - val_loss: 0.0192 - learning_rate: 3.1250e-04

Epoch 118/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 938us/step - loss: 0.0608 - val_loss: 0.0179 - learning_rate: 3.1250e-04

Epoch 119/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 931us/step - loss: 0.0578 - val_loss: 0.0175 - learning_rate: 1.5625e-04

Epoch 120/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 940us/step - loss: 0.0674 - val_loss: 0.0177 - learning_rate: 1.5625e-04

Epoch 121/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 927us/step - loss: 0.0538 - val_loss: 0.0176 - learning_rate: 1.5625e-04

Epoch 122/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 949us/step - loss: 0.0530 - val_loss: 0.0171 - learning_rate: 1.5625e-04

Epoch 123/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 967us/step - loss: 0.0612 - val_loss: 0.0175 - learning_rate: 1.5625e-04

Epoch 124/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0505 - val_loss: 0.0173 - learning_rate: 1.5625e-04

Epoch 125/150

32/32 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step - loss: 0.0533 - val_loss: 0.0172 - learning_rate: 1.5625e-04
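The learning-rate column in this log follows the ReduceLROnPlateau rule configured above: each reduction multiplies the rate by factor=0.5, clamped at min_lr=1e-6. The short pure-Python sketch below (halving_schedule is a hypothetical helper) lists every value that rule can produce from 0.04. The first nine values, 0.04 down to 1.5625e-04, are the ones visible in the log, where 0.00125 is printed rounded as 0.0012. It also shows that sixteen reductions would be needed before the 1e-6 floor is reached, so the floor plays no role in this run, which early stopping ends after eight reductions.

```python
def halving_schedule(initial_lr=0.04, factor=0.5, min_lr=1e-6):
    """All learning rates reachable by repeated plateau-style reductions."""
    rates = [initial_lr]
    # Keep reducing while the current rate is still above the floor
    while rates[-1] > min_lr:
        rates.append(max(rates[-1] * factor, min_lr))
    return rates


schedule = halving_schedule()
print(len(schedule) - 1, "reductions until the floor is reached")
print(schedule[:9])  # the rates that appear in the log above
```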

Part 4: Visualization and Analysis

Let’s compare the training processes:
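The plotting cell is not included in this export. A minimal Matplotlib sketch of such a comparison follows; plot_training_comparison is a hypothetical helper that takes the history dictionaries returned by fit() (for example history_no_callbacks.history) and a list of recorded learning rates. Losses are drawn on a log scale because, in the logs above, they span more than an order of magnitude.

```python
import matplotlib.pyplot as plt


def plot_training_comparison(hist_no_cb, hist_cb, learning_rates):
    """Side-by-side loss curves for both runs and the recorded learning rate."""
    fig, (ax_loss, ax_lr) = plt.subplots(1, 2, figsize=(12, 4))

    for label, hist in (("no callbacks", hist_no_cb), ("with callbacks", hist_cb)):
        ax_loss.plot(hist["loss"], label=f"train ({label})")
        ax_loss.plot(hist["val_loss"], linestyle="--", label=f"val ({label})")
    ax_loss.set_yscale("log")
    ax_loss.set_xlabel("Epoch")
    ax_loss.set_ylabel("MSE (scaled units)")
    ax_loss.set_title("Training and validation loss")
    ax_loss.legend()

    ax_lr.plot(learning_rates, marker=".")
    ax_lr.set_yscale("log")  # each halving shows up as an equal-height step
    ax_lr.set_xlabel("Epoch")
    ax_lr.set_ylabel("Learning rate")
    ax_lr.set_title("Learning rate (run with callbacks)")

    fig.tight_layout()
    return fig


# Usage in the notebook (history and callback names are assumptions):
# fig = plot_training_comparison(history_no_callbacks.history,
#                                history_with_callbacks.history,
#                                lr_history.learning_rates)
# plt.show()
```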

Part 5: Model Comparison

Let’s compare the final performance:
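The evaluation cell is not shown either. Both models are trained on standardized targets, so if the reported MSEs are meant to be in the original units of y, predictions must first be mapped back with scaler_y.inverse_transform. The NumPy sketch below (helper names are hypothetical, and the data are toy values) makes the relationship explicit: undoing standardization multiplies every error by the scale and the mean cancels, so the MSE in original units equals scale² times the MSE in scaled units.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error between two arrays with the same number of elements."""
    y_true = np.asarray(y_true, dtype=float).reshape(-1)
    y_pred = np.asarray(y_pred, dtype=float).reshape(-1)
    return float(np.mean((y_true - y_pred) ** 2))


def to_original_units(y_scaled, mean, scale):
    """Undo standardization: y = y_scaled * scale + mean."""
    return np.asarray(y_scaled, dtype=float) * scale + mean


# Toy standardized targets and predictions
mean, scale = 3.0, 2.0
y_true_s = np.array([0.0, 1.0, -1.0, 0.5])
y_pred_s = np.array([0.1, 0.8, -1.3, 0.5])

mse_scaled = mse(y_true_s, y_pred_s)
mse_original = mse(to_original_units(y_true_s, mean, scale),
                   to_original_units(y_pred_s, mean, scale))
print(mse_scaled, mse_original)  # the second is scale**2 = 4 times the first (up to rounding)

# In the notebook, with a hypothetical test split (X_test_scaled, y_test):
# y_pred = scaler_y.inverse_transform(model_with_callbacks.predict(X_test_scaled))
# print(f"MSE with callbacks: {mse(y_test, y_pred):.6f}")
```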

63/63 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step

63/63 ━━━━━━━━━━━━━━━━━━━━ 0s 1ms/step

MSE without callbacks: 5.390879

MSE with callbacks: 0.631188